The Generative UX Manifesto

A vision for building adaptive, interactive, and intelligent user experiences with AI.

Generative AI has reached the point where two paths have formed:

- Generative Content: systems create text, images, video and other static content

- Generative UI: systems generate basic interactive websites and apps

Both represent meaningful progress. But neither has reached the bar set by today’s non-AI-generated consumer products: products that users trust, return to, and rely on in everyday contexts.

As AI becomes embedded in decision-making, learning, and exploration, interaction cannot stop at producing outputs. We need systems that shape how interaction unfolds over time. We need systems that generate experiences.

We call this frontier Generative UX.

What Is Generative UX?

Generative UX is the capability of an AI system to build and evolve a user’s experience in real time, based on context (what we know about the user) and intent (what the user wants to do).

Unlike Generative UI (which focuses only on which interface is rendered), Generative UX focuses on how interaction behaves and evolves over time. It encompasses the full experiential layer of an AI system, including:

- Intent Modelling: how user wants are inferred and met

- Structure: how information is expressed in the ideal format for this user

- Sequencing: how information is grouped, ordered, and revealed

- Affordance: which actions are available and suggested at each moment

- Mutability: how the system adapts to user input

- Convergence: how the experience guides the user toward an end result

- Latency: how quickly the system responds and adapts

Generative UX is not a rendering problem. It is a systems problem, a reasoning problem, and an interaction-design problem. It sits at the intersection of model orchestration, dynamic UI composition, state and memory management, user intent modelling, UX semantics, content accuracy and safety, and performance constraints.

Generative UX does not remove people from the creative process. Human intent, judgment, and expression remain essential to building experiences that resonate. What Generative UX replaces is not creativity but friction. It enables systems that apply structure, constraints, and real-time intelligence so that human intent can be expressed more clearly, not overwritten.

Generative UX is the missing layer that turns intelligence into experience.

Why Generative UI Alone Falls Short

Generative UI systems are powerful, but they are optimised for interface creation rather than experience evolution. This leads to three structural limitations.

1. One-shot interaction models: most systems generate a complete interface as a single response, freezing assumptions about user intent. When that interface is regenerated and redeployed on the next turn, continuity is broken, flow is disrupted, and the experience resets instead of evolving.

2. Latency mismatch: generation times measured in tens of seconds are incompatible with consumer expectations, where meaningful interaction must begin within a few seconds. Slow first interaction breaks momentum before it starts.

3. No experience-level optimisation: current systems optimise for technical or visual correctness, such as producing valid JavaScript or HTML or matching a given layout or mock, rather than for experiential outcomes like clarity, momentum or actionability. Interfaces may be structurally correct while the experience still fails.

Generative UI is an important foundation. Generative UX is the discipline that turns AI capability into usable, delightful, everyday products.

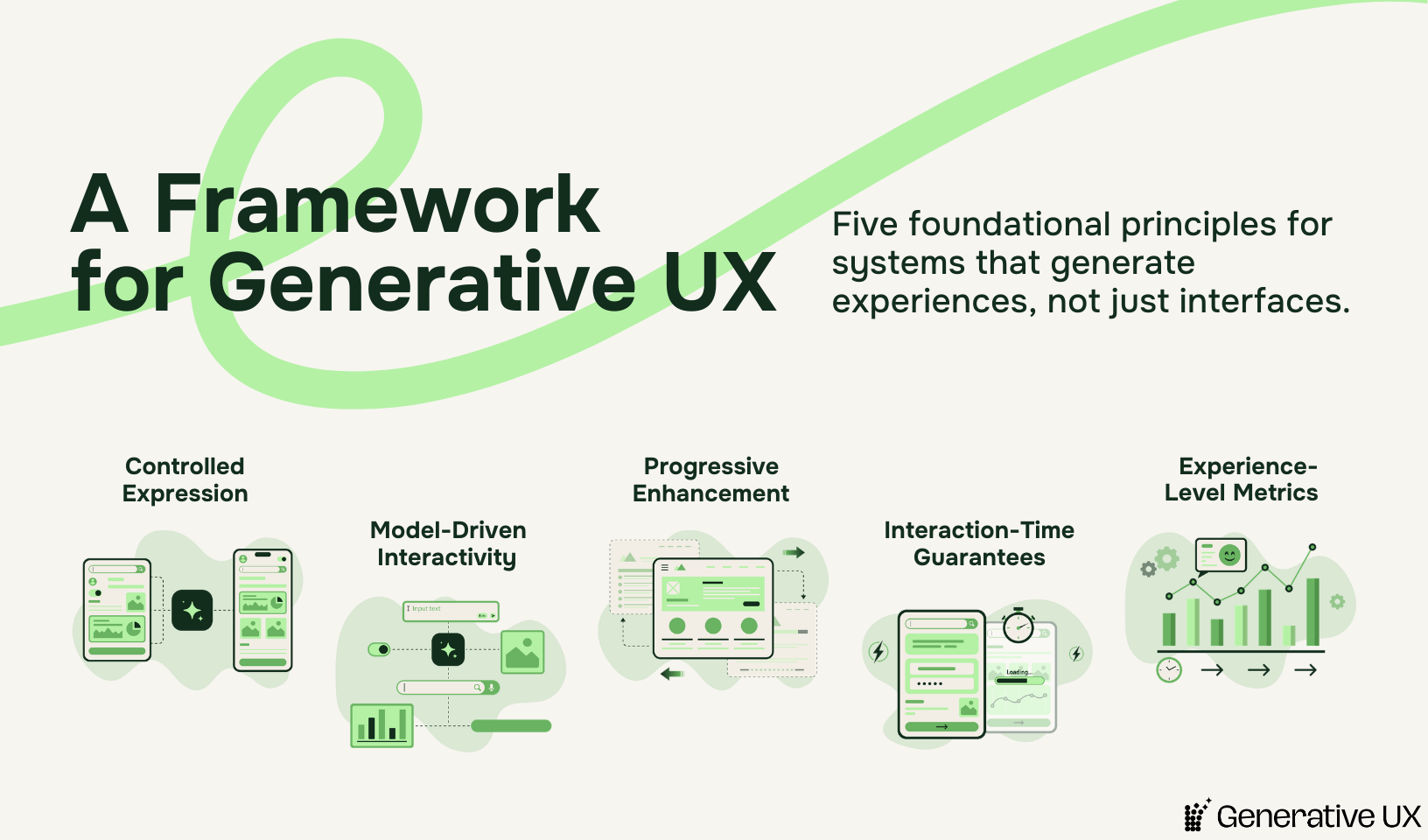

A Framework for Generative UX

We propose five foundational principles for systems aiming to deliver Generative UX.

1. Controlled Expression

Generative UX requires a curated, extensible set of components with known semantics and behaviour. Constraints are not a limitation. They enable clarity, consistency, accessibility, and safety at scale.
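
One way to picture a curated component set is as a small registry that model output is checked against before anything is rendered. The sketch below is illustrative only: the component names (`card`, `choice_list`), the prop names, and the `validate` helper are all assumptions made for this example, not a description of any particular system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentSpec:
    """Declares which props a component requires and which it tolerates."""
    name: str
    required_props: frozenset
    optional_props: frozenset = frozenset()


# Hypothetical curated set; a real registry would be larger and extensible.
REGISTRY = {
    spec.name: spec
    for spec in (
        ComponentSpec("card", frozenset({"title"}), frozenset({"body"})),
        ComponentSpec("choice_list", frozenset({"prompt", "options"})),
    )
}


def validate(node: dict) -> list[str]:
    """Return a list of problems; an empty list means the node may be rendered."""
    spec = REGISTRY.get(node.get("type"))
    if spec is None:
        return [f"unknown component: {node.get('type')!r}"]
    props = set(node.get("props", {}))
    problems = [f"missing prop: {p}" for p in sorted(spec.required_props - props)]
    allowed = spec.required_props | spec.optional_props
    problems += [f"unexpected prop: {p}" for p in sorted(props - allowed)]
    return problems
```

Because every component is declared up front, anything the model proposes outside the set is rejected before it reaches the user, which is where the consistency and safety benefits of the constraint come from.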

2. Model-Driven Interactivity

Generative UX combines the expressive flexibility of language with the usability of structured interfaces. Free-form input is interpreted by the model, but interaction is guided through explicit affordances. Every user action should be interpretable by the system and capable of triggering reasoning, adaptation, or expansion.

3. Progressive Enhancement

Generative UX systems control the detail and timing of information. Content is broken down and revealed progressively, so users see only what is useful at each step. Depth and complexity are offered, but not forced.
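
As a rough illustration of progressive reveal, the sketch below keeps a summary and a detail string for each section and only shows detail for sections the user has asked to expand. The `Section` and `ProgressiveView` names and their fields are hypothetical, chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    id: str
    summary: str  # always shown
    detail: str   # shown only once the user asks for it


@dataclass
class ProgressiveView:
    sections: list[Section]
    expanded: set[str] = field(default_factory=set)

    def expand(self, section_id: str) -> None:
        """Mark a section as expanded; unknown ids are ignored."""
        if any(s.id == section_id for s in self.sections):
            self.expanded.add(section_id)

    def render(self) -> list[str]:
        """Return the lines visible right now: summaries, plus detail where expanded."""
        lines = []
        for s in self.sections:
            lines.append(s.summary)
            if s.id in self.expanded:
                lines.append("  " + s.detail)
        return lines
```

The user starts with summaries only; calling `expand` on a section adds its detail on the next render, so depth is available without being forced.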

4. Interaction-Time Guarantees

Generative UX systems must meet strict interaction-time budgets. Feedback to interactions must occur within milliseconds, and meaningful responses within seconds. Experiences must support streaming responses rather than waiting for completion.
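
A minimal sketch of this idea, using Python's `asyncio`: acknowledge the interaction immediately, stream chunks as they arrive, and fall back if the first chunk misses its budget. The 2-second budget, the `fake_model_stream` stand-in, and the `"ack"` / `"fallback"` event names are assumptions for illustration, not figures or APIs from any specific system.

```python
import asyncio
from typing import AsyncIterator

FIRST_CHUNK_BUDGET_S = 2.0  # assumed budget for the first meaningful chunk


async def fake_model_stream(delay_s: float = 0.01) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming model call."""
    for chunk in ("Here are ", "three ", "options."):
        await asyncio.sleep(delay_s)
        yield chunk


async def respond(stream: AsyncIterator[str],
                  budget_s: float = FIRST_CHUNK_BUDGET_S) -> list[str]:
    """Collect the events a client would see, in order."""
    events = ["ack"]  # immediate feedback, before any model output exists
    iterator = stream.__aiter__()
    try:
        # Enforce the budget only on the first chunk; later chunks just stream.
        first = await asyncio.wait_for(iterator.__anext__(), timeout=budget_s)
    except asyncio.TimeoutError:
        events.append("fallback")  # e.g. show a lighter, precomputed response
        return events
    events.append(first)
    async for chunk in iterator:
        events.append(chunk)
    return events
```

With the default delay, `asyncio.run(respond(fake_model_stream()))` yields the acknowledgement followed by each chunk in order; with a slow stream and a tight budget, the user still sees the acknowledgement and a fallback instead of waiting for completion.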

5. Experience-Level Metrics

Generative UX systems must be evaluated on how they evolve over time, not just on the accuracy of the initial output. This includes subjective measures like flow, trust and utility as well as objective measures like latency and quantity.

From Principles to Practice

This framework is not theoretical.

At Heywa Labs, we are building a consumer AI product while holding ourselves to these constraints in production. Doing so has forced a set of concrete trade-offs: prioritising responsiveness over completeness, designing for evolution rather than one-shot generation, and constraining expression to enable speed, clarity and trust.

Working this way means giving up some of the apparent freedom of unconstrained generation in exchange for experiences that remain usable, intelligible, and convergent. It also means treating interaction state as the primary unit of design, rather than pages, prompts, or static outputs.

We include this not to claim ownership of the idea, but to demonstrate that Generative UX is a buildable discipline, not just a conceptual one. The challenges it addresses are real, and so are the trade-offs required to make it work.

Generative UX is not a proprietary paradigm. It is a frontier and an emerging discipline whose shape will be defined collectively.

An Invitation to the Ecosystem

Progress will require collaboration between AI researchers, interaction designers, front-end and systems engineers, UX theorists, cognitive scientists, product builders, accessibility advocates, and safety and ethics specialists.

We invite the community to help define shared language and standards, best practices and patterns, meaningful metrics, evaluation frameworks, benchmarks, and open research questions.

Let’s build this next interface layer together.